Just yesterday, I was talking about how Apple’s new AI-powered transcription and summarization for voice memos on iPhone covers one of the features Rabbit promised to bring to the R1 later this year, which gives us one less reason to buy the device.

Today, at Google I/O, the company showed off Project Astra, a multimodal AI agent designed to help with everyday tasks. It takes audio, image, and video input and is supposed to respond accurately to your questions and follow-ups. Google claims its real USP is that it works in real time, bringing “response time down to conversational level”.

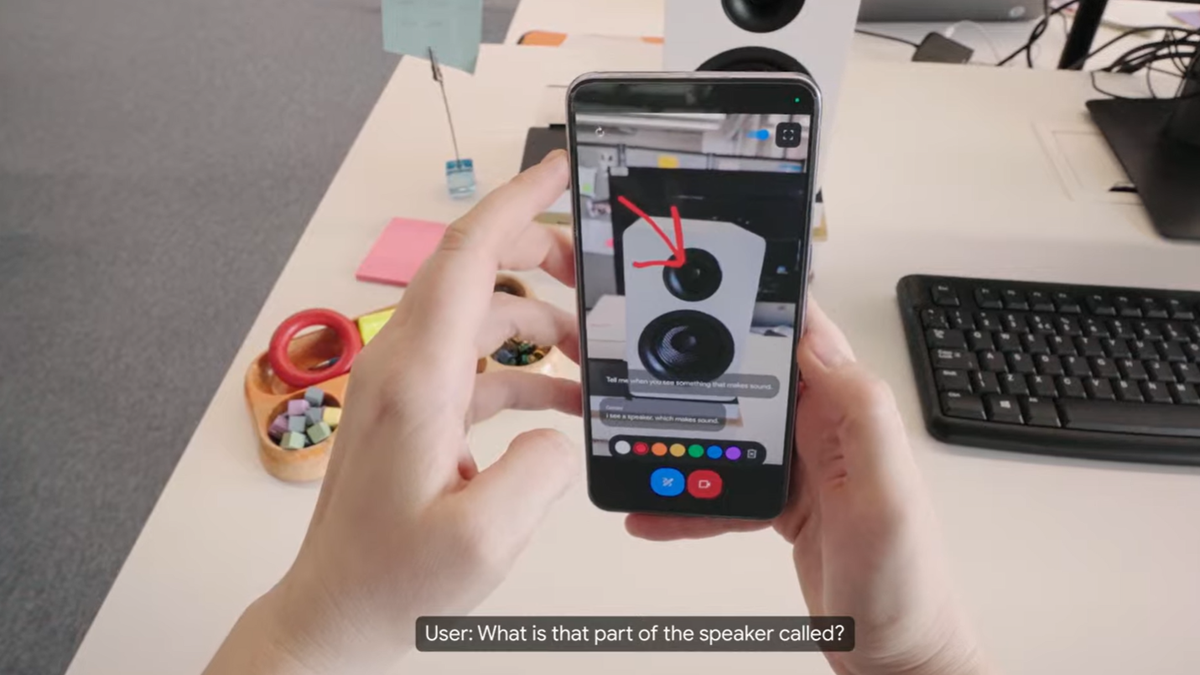

The keynote showed a woman walking around her workplace and asking her phone to identify a specific part of a speaker. The phone promptly and correctly identified it as the tweeter. She went on to ask it several other questions and even asked it where it had last seen her misplaced glasses.

Google touted the whole “Just Ask” functionality multiple times during the keynote; it’s clearly marketing the new Gemini AI features as a way to get everything you need with a simple request. Another new addition is the Side Panel, which will appear in Gmail and Google Docs to save you time in those services. It can summarize a long email thread, pull out the key points of an hour-long presentation, or summarize a PDF.

The presentation showed an example in which a user asked the Side Panel to go through all the receipts emailed within the last 30 days and put their details (vendor, date, etc.) into a spreadsheet. The keynote speaker mentioned that we’d be able to fully automate this, so the spreadsheet updates alongside Gmail as new receipts arrive. This kind of automation is one of the R1’s biggest promises for late 2024 and the feature Rabbit has been most vocal about.

I’ve said it once and I’ll say it again: dedicated AI devices will be practically useless as soon as phones get smart enough to offer the same functionality (which will happen sooner than we think).