A new research paper released by OpenAI on Thursday says a superhuman AI is coming, and the company is developing tools to ensure it doesn’t turn against humans. OpenAI’s chief scientist, Ilya Sutskever, is listed as a lead author on the paper, but not on the blog post that accompanied it, and his role at the company remains unclear.

“We believe superintelligence—AI vastly smarter than humans—could be developed within the next ten years,” said OpenAI in a blog post. “Figuring out how to align future superhuman AI systems to be safe has never been more important, and it is now easier than ever to make empirical progress on this problem.”

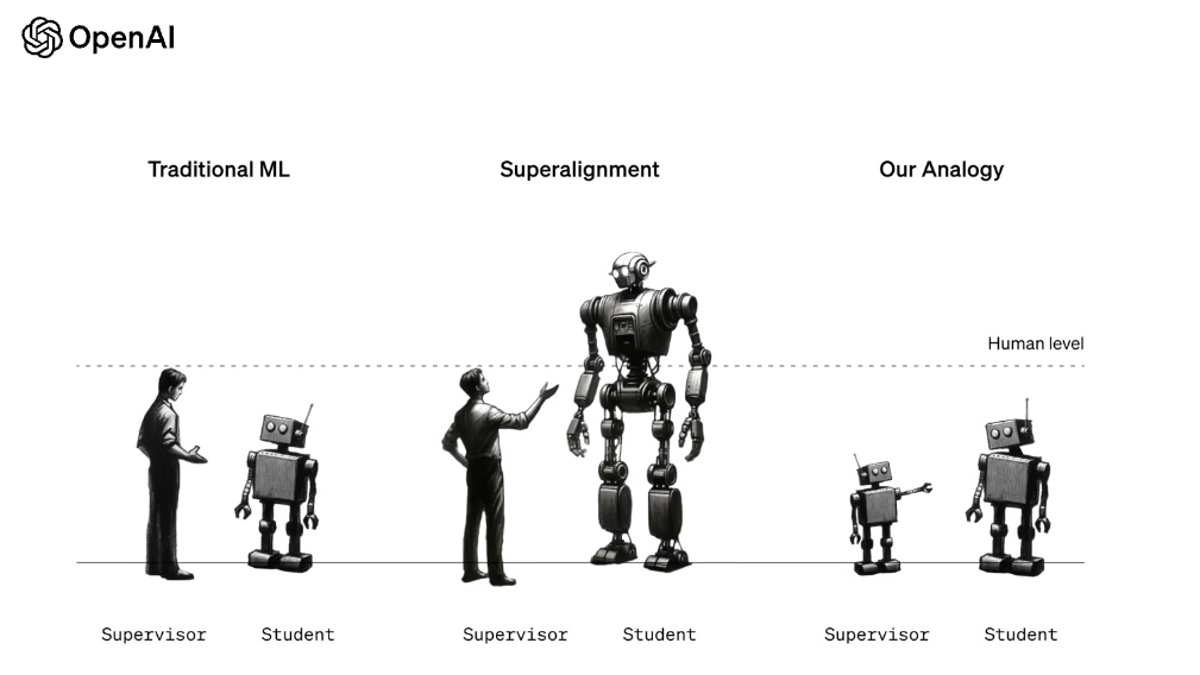

“Weak-to-strong generalization” is the first paper from Ilya Sutskever and Jan Leike’s “Superalignment” team, created in July to make sure AI systems much smarter than humans will still follow human rules. The proposed approach? Have smaller, less capable AI models teach much more capable ones.

Currently, OpenAI uses humans to “align” ChatGPT by giving it positive or negative feedback on its responses. That’s how OpenAI ensures ChatGPT won’t give people instructions on how to build napalm at home or produce other dangerous outputs. As ChatGPT gets smarter, however, OpenAI acknowledges that human feedback alone won’t be sufficient to train these models, so it is exploring whether a less capable AI can supervise them on our behalf.

This is the first sign of life from Ilya Sutskever at OpenAI since Sam Altman announced that nearly everyone was back at OpenAI, while leaving Sutskever’s status up in the air. Sutskever, a cofounder of OpenAI, was one of the board members behind Altman’s firing, and he’s a leading voice in the AI community for responsible AI deployment. He’s no longer on the company’s board, but he may still have a role there.

The other leader of OpenAI’s Superalignment team, Leike, gave kudos to Ilya for “stoking the fires” on Thursday, but commended others for “moving things forward every day.” The comments raise the question of whether Sutskever started this project but wasn’t around to finish it.

According to sources who spoke to Business Insider, he has been largely invisible at the company over the last few weeks and has hired a lawyer. Jan Leike and other members of OpenAI’s Superalignment team made public statements about this landmark paper, but Sutskever hasn’t said a word; he has only retweeted them.

The study found that training large AI models on labels produced by smaller AI models, which the researchers call “weak-to-strong generalization,” yields large models that are more accurate than their weak supervisors across multiple tasks. Much of the study used a GPT-2-level model to supervise GPT-4. It’s important to note that OpenAI says it is not convinced this is a “solution” to superalignment; it is merely a promising framework for studying how to train a superhuman AI.
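To make “more accurate than their weak supervisors” concrete, the paper reports a metric it calls performance gap recovered: the share of the gap between the weak supervisor’s accuracy and the strong model’s best-case accuracy (when trained on ground-truth labels) that the weakly supervised strong model manages to close. The sketch below is a minimal illustration of that ratio; the function name and the accuracy figures are hypothetical and are not results from the paper.

```python
def performance_gap_recovered(weak: float, weak_to_strong: float, strong_ceiling: float) -> float:
    """Fraction of the weak-to-ceiling accuracy gap closed by the strong student.

    weak:           accuracy of the small supervisor model on its own
    weak_to_strong: accuracy of the large model trained on the small model's labels
    strong_ceiling: accuracy of the large model trained on ground-truth labels
    """
    gap = strong_ceiling - weak
    if gap <= 0:
        # The metric is only meaningful when the strong model can beat the weak one.
        raise ValueError("strong_ceiling must exceed weak accuracy")
    return (weak_to_strong - weak) / gap


# Hypothetical accuracies, for illustration only (not figures from the study).
print(performance_gap_recovered(weak=0.60, weak_to_strong=0.80, strong_ceiling=0.90))
```

With these made-up numbers the strong model closes roughly two-thirds of the gap. A value of 0 would mean it merely imitated its weak teacher; a value of 1 would mean weak supervision was as good as ground truth.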

“Broadly superhuman models would be extraordinarily powerful and, if misused or misaligned with human values, could potentially cause catastrophic harm,” said OpenAI researchers in the study, noting that it’s been unclear how to empirically study this topic. “We believe it is now easier to make progress on this problem than ever before.”

In November, there was significant speculation that OpenAI was close to AGI, though little of it was conclusive. This paper confirms that OpenAI is actively building tools to control an AGI, but it does not confirm that an AGI exists. As for Ilya Sutskever, his status remains in a strange limbo. OpenAI has not confirmed his position at the company, yet his team just quietly put out groundbreaking research while he retweets their corporate announcements.