In the aftermath of last week’s shocking OpenAI power struggle, there was one final revelation that acted as a kind of epilogue to the sprawling mess: a Reuters report describing a supposedly startling breakthrough at the startup. That breakthrough allegedly happened via a little-known program dubbed “Q-Star” or “Q*.”

According to the report, one of the things that may have kicked off the internecine conflict at the influential AI company was this Q*-related “discovery.” Ahead of CEO Sam Altman’s ouster, several OpenAI staffers allegedly wrote to the company’s board about a “powerful artificial intelligence discovery that they said could threaten humanity.” This letter was “one factor among a longer list of grievances by the board leading to Altman’s firing,” Reuters claimed, citing anonymous sources.

Frankly, the story sounded pretty crazy. What was this weird new program, and why did it supposedly cause all of the chaos at OpenAI? Citing its sources, Reuters claimed that the Q* program had enabled an AI model to do “grade-school-level math,” a technological breakthrough that, if true, could pave the way for greater success in creating artificial general intelligence, or AGI. A separate report from The Information largely echoed the Reuters article.

Still, the company hasn’t shared any details about this supposed Q* program, leaving only the anonymously sourced reports and rampant online speculation about what the program actually is.

Some have speculated that the program might (because of its name) have something to do with Q-learning, a form of machine learning. So, yeah, what is Q-learning, and how might it apply to OpenAI’s secretive program?

In general, there are a few different ways to teach an AI program to do something. One of these is known as “supervised learning,” and it works by feeding AI agents large tranches of “labeled” data, which is then used to train the program to perform a function by itself (typically, that function is classifying more data). By and large, programs like ChatGPT, OpenAI’s content-generating bot, were created using some form of supervised learning, with a round of reinforcement learning from human feedback layered on top.
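To make that a little more concrete, here’s a deliberately tiny sketch of supervised learning in Python. It is nothing like how ChatGPT was actually built. It assumes a made-up, hand-labeled dataset of number pairs tagged “cat” or “dog,” and it uses a simple nearest-centroid classifier (our own pick for illustration): “training” just means averaging the examples for each label, and new, unlabeled data gets whichever label’s average it sits closest to.

```python
from statistics import mean

# Hypothetical labeled training data: each example is (features, label).
TRAINING_DATA = [
    ((1.0, 1.2), "cat"),
    ((0.8, 1.0), "cat"),
    ((3.1, 3.0), "dog"),
    ((2.9, 3.3), "dog"),
]

def train(examples):
    """'Training' a nearest-centroid model: average the feature vectors for each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(dim) for dim in zip(*points))
        for label, points in by_label.items()
    }

def predict(model, features):
    """Classify a new example by picking the label whose average is closest."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(model, key=lambda label: squared_distance(model[label], features))

model = train(TRAINING_DATA)
print(predict(model, (1.1, 0.9)))  # prints: cat
print(predict(model, (3.0, 2.8)))  # prints: dog
```

The labels are doing all the work here: the program never figures out what a “cat” is, it just learns to reproduce the tags humans attached to the training examples.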

Unsupervised learning, meanwhile, is a form of machine learning (ML) in which AI algorithms sift through large tranches of unlabeled data and try to find patterns on their own. This kind of artificial intelligence can be put to a number of different uses, such as the recommendation systems that companies like Netflix and Spotify use to suggest new content to users based on their past choices.
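By way of contrast, here’s an equally tiny unsupervised sketch: plain k-means clustering, one common way of finding groups in unlabeled data. The viewing-habits numbers are invented for illustration, and this is not a claim about how Netflix or Spotify actually build their recommendations. The point is simply that the algorithm is never told which group any user belongs to.

```python
import math
import random

# Hypothetical, unlabeled data: (hours of comedy, hours of documentaries) watched per week by six users.
VIEWING_HABITS = [(5.0, 0.5), (6.0, 1.0), (5.5, 0.2), (0.5, 4.0), (1.0, 5.0), (0.2, 6.0)]

def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means: split unlabeled 2-D points into k clusters."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the average of the points assigned to it.
        for i, members in enumerate(clusters):
            if members:  # leave a centroid where it is if no points were assigned to it
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, clusters

centroids, clusters = kmeans(VIEWING_HABITS, k=2)
for centroid, members in zip(centroids, clusters):
    print(f"group centered near {centroid}: {members}")
```

On this toy data the algorithm separates the comedy fans from the documentary fans without ever being told those categories exist; a recommender could then suggest to each user what others in their group watched.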

Finally, there’s reinforcement learning, or RL, which is a category of ML that incentivizes an AI program to achieve a goal within a specific environment. Q-learning is a subcategory of reinforcement learning. In RL, researchers treat AI agents sort of like a dog they’re trying to train. Programs are “rewarded” if they take actions that bring about certain outcomes and are penalized if they take others. In this way, the program is effectively “trained” to seek the best possible outcome in a given situation. In Q-learning, the agent works through trial and error to estimate how much long-term reward each possible action is likely to earn in each situation (its “Q-value”), and gradually learns to pick whichever action scores highest on the way to the goal it’s been programmed to pursue.
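For the curious, here’s a minimal sketch of textbook tabular Q-learning, assuming a made-up five-square corridor: the agent starts at one end, earns a reward of 1 for reaching the far end, and pays a small penalty for every other move. Each update nudges the estimate Q(s, a) toward the reward plus the discounted best estimate for the next state, r + γ·max Q(s′, ·). The learning rate (alpha), discount (gamma), and exploration rate (epsilon) are arbitrary illustrative choices, and none of this reflects anything known about OpenAI’s Q*.

```python
import random

N_STATES = 5        # squares 0..4 in a hypothetical one-dimensional corridor
GOAL = 4            # reaching this square ends an episode
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right

def step(state, action):
    """Toy environment: +1 for reaching the goal, a small penalty for any other move."""
    next_state = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][a] is the agent's running estimate of the long-term reward for action a in state s.
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: usually take the best-looking action, occasionally a random one.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best next value.
            best_next = 0.0 if done else max(q[next_state])
            q[state][a] += alpha * (reward + gamma * best_next - q[state][a])
            state = next_state
    return q

q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)  # ['right', 'right', 'right', 'right']
```

Nobody tells the agent that “right” is the answer; it stumbles around, collects rewards and penalties, and the Q-values gradually converge on stepping right from every square. The occasional random move (epsilon) is what keeps it exploring instead of locking in on its first guess.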

What does this all have to do with OpenAI’s supposed “math” breakthrough? One could speculate that the program that (allegedly) managed to do simple math may have arrived at that ability via some form of Q-learning-style RL. All of this said, many experts are skeptical that AI programs can reliably do math problems yet. Others think that, even if an AI could accomplish such goals, it wouldn’t necessarily translate into broader AGI breakthroughs. As MIT Technology Review put it:

> Researchers have for years tried to get AI models to solve math problems. Language models like ChatGPT and GPT-4 can do some math, but not very well or reliably. “We currently don’t have the algorithms or even the right architectures to be able to solve math problems reliably using AI,” says Wenda Li, an AI lecturer at the University of Edinburgh. “Deep learning and transformers (a kind of neural network), which is what language models use, are excellent at recognizing patterns, but that alone is likely not enough,” Li adds.

In short: We really don’t know much about Q*, though, if the experts are to be believed, the hype around it may be just that: hype.

Question of the day: Seriously, what the heck happened with Sam Altman?

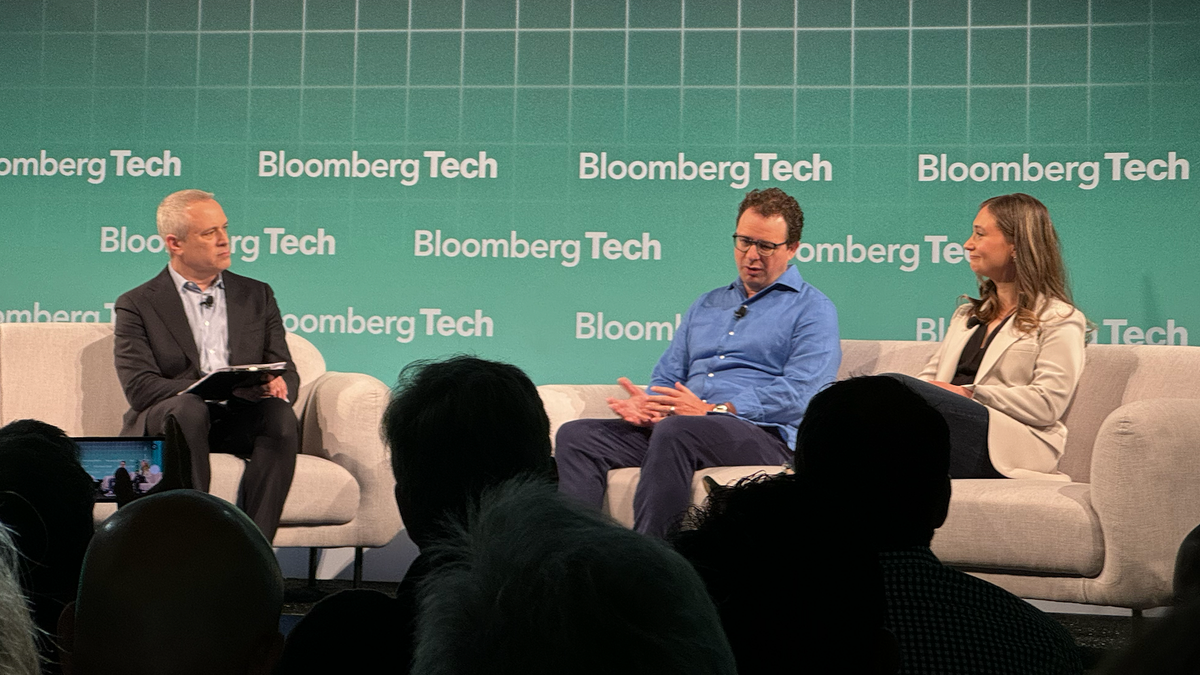

Despite the fact that he’s back at OpenAI, it’s worth remembering that we still don’t know what the fuck happened with Sam Altman last week. In an interview with The Verge on Wednesday, Altman gave pretty much nothing away about what precipitated the dramatic power struggle at his company. Despite continual prodding from the outlet’s reporter, Altman just sorta threw up his hands and said he wouldn’t be talking about it for the foreseeable future. “I totally get why people want an answer right now. But I also think it’s totally unreasonable to expect it,” the reinstated CEO said. The most The Verge was able to get out of him is that the company is in the midst of an “independent review” into what happened, a process that, he said, he doesn’t want to “interfere” with. Our own coverage of last week’s shitshow interpreted it through a narrative involving a clash between the board’s ethics and Altman’s dogged push to commercialize OpenAI’s automated technology. However, this narrative is just that: a narrative. We don’t know the specific details of what led to Altman’s ouster, though we sure would like to.

Other headlines this week

- Israel is using AI to identify suspected Palestinian militants. If you were worried that governments would waste no time in weaponizing AI for modern warfare, listen to this. A story from The Guardian reports that Israel is using an AI program it has dubbed Habsora, or “The Gospel,” to identify apparent militant targets in Gaza. The program is used to “produce targets at a fast pace,” according to a statement posted on the Israel Defense Forces website, and sources told The Guardian that it has helped the IDF build a database of some 30,000 to 40,000 suspected militants. The outlet reports: “Systems such as the Gospel…[sources said] had played a critical role in building lists of individuals authorised to be assassinated.”

- Elon Musk weighed in on AI copyright issues this week and, as per usual, sounded dumb. Multiple lawsuits have argued that tech companies are essentially stealing and repackaging copyrighted material, allowing them to monetize other people’s work (typically that of authors and visual artists) for free. Musk waded into this contentious conversation during his weird-ass DealBook interview this week. Naturally, the thoughts he shared were less than intelligible. He said, and I quote: “I don’t know, except to say that by the time these lawsuits are decided we’ll have Digital God. So, you can ask Digital God at that point. Um. These lawsuits won’t be decided on a timeframe that’s relevant.” Beautiful, Elon. You just keep an eye out for that digital deity. Meanwhile, in the real world, legal and regulatory experts will have to contend with the disruptions this technology keeps causing for people way less fortunate than the Silicon Valley C-suite.

- Cruise robotaxis continue to struggle. Cruise, the robotaxi company owned by General Motors, has been having a really tough year. Its CEO stepped down last week, following a whirlwind of controversy involving the company’s various mishaps in San Francisco. This week, it was reported that GM would be scaling back its investments in the company. “We expect the pace of Cruise’s expansion to be more deliberate when operations resume, resulting in substantially lower spending in 2024 than in 2023,” GM CEO Mary Barra reportedly said at an investor conference Wednesday.