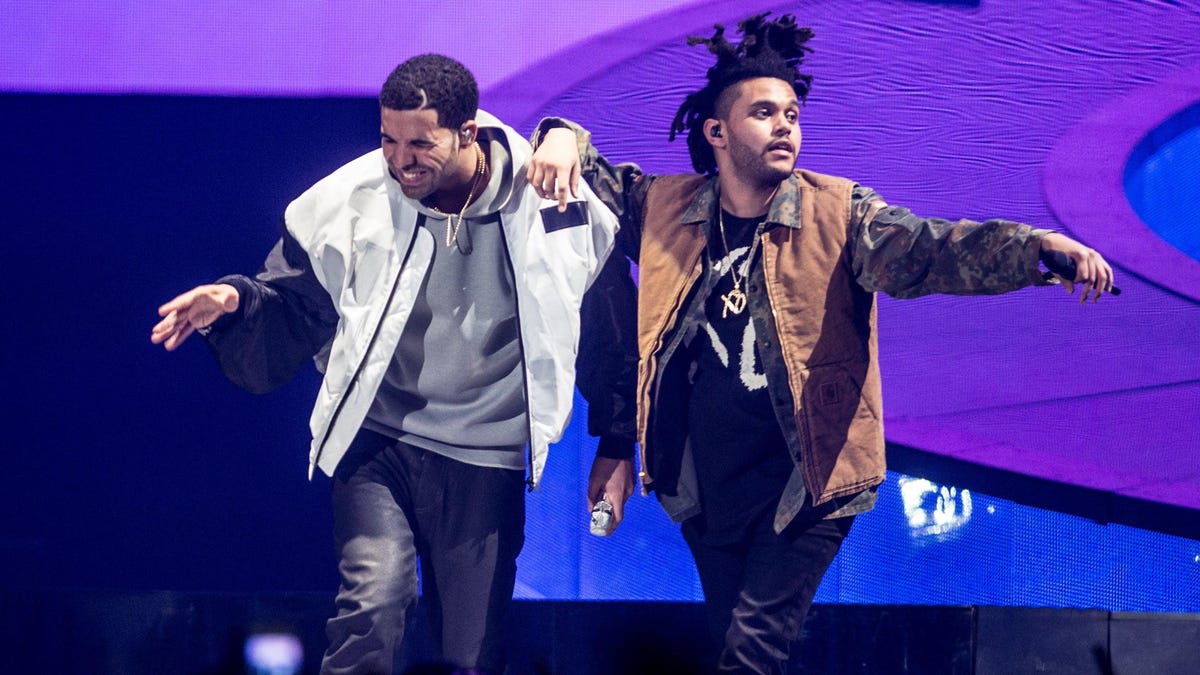

YouTube released new policy updates on Tuesday to combat AI-generated content that mimics the voice and style of music artists. Earlier this year, “Heart on My Sleeve,” a song created with generative AI to sound like a track by Drake and The Weeknd, garnered over 600,000 plays and was even considered for a Grammy nomination.

“We’re also introducing the ability for our music partners to request the removal of AI-generated music content that mimics an artist’s unique singing or rapping voice,” said YouTube in a blog post.

Music created with generative AI has shocked the world with songs that are genuinely good and difficult to distinguish from the real thing. One fan paid more than $4,000 on Discord for an AI-generated Frank Ocean song, not knowing it was a fake. YouTube is trying to address this problem head-on; however, it may still lack the tools to do so effectively.

In deciding whether to take down a song, YouTube will consider whether news outlets are reporting on it and whether it is the subject of analysis or critique of the synthetic vocals. The video platform will also require creators to disclose when they've used AI to create altered content. These disclosures will appear on YouTube videos as content labels.

The problem with these strategies is that much of the responsibility rests on public conversation. Waiting for news outlets to report on a song created with generative AI, or for analysts and critics to weigh in on social media, likely means the song has already gone mainstream. Requiring creators to label their content, much like the labels Adobe added to AI-generated images, relies heavily on good-faith actors and may not work in practice.

“We also recognize that bad actors will inevitably try to circumvent these guardrails,” YouTube said. “We’ll incorporate user feedback and learning to continuously improve our protections.”

The new policies seem to mark a reversal of course for YouTube. The company reportedly developed a tool that would license an artist's voice from their music label and let users make songs that sound just like that artist. However, talks with labels such as Sony Music and UMG apparently never solidified, and the company may now be headed in the opposite direction on AI-generated music.

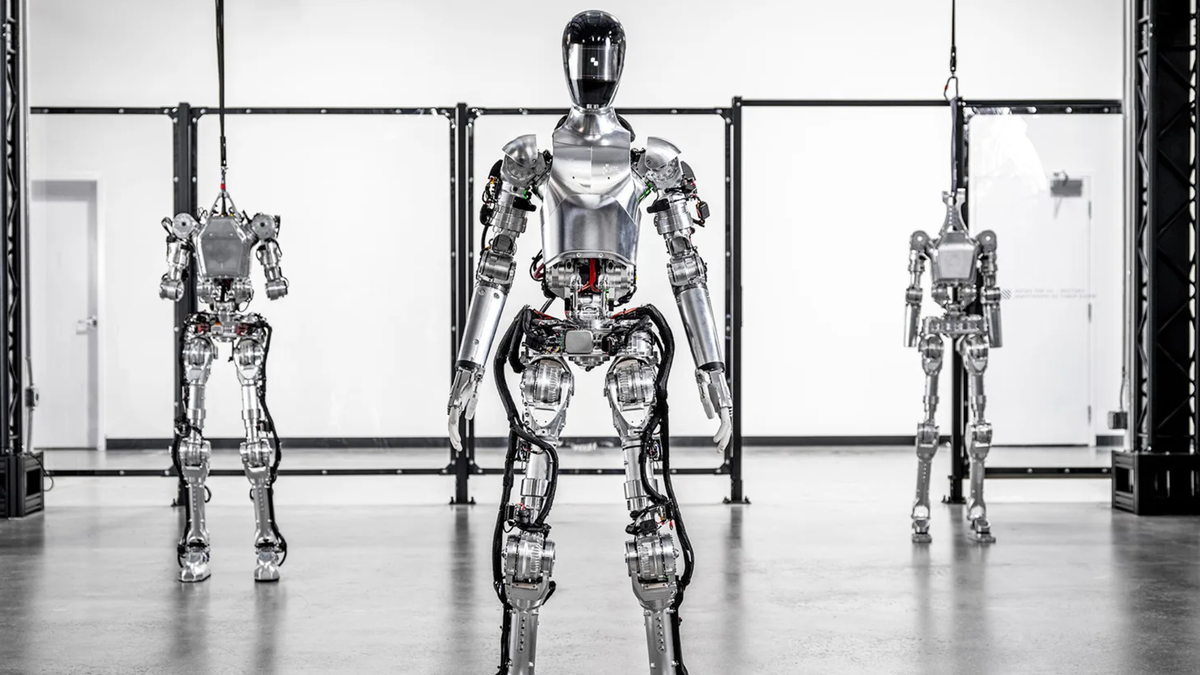

YouTube is also deploying AI classifiers to help a team of 20,000 reviewers across Google enforce its Community Guidelines. The company says AI is continuously improving both the speed and accuracy of its content moderation.