As robotics and artificial intelligence advance, making robots less creepy and more friendly and human-like has become a coveted niche of development. Now, federally funded researchers claim to have created a robot that can mimic the facial expressions of the person it is talking to.

In a recently published paper, researchers working under a grant from the National Science Foundation describe how they built their newest robot, “Emo,” and the steps they took to make the machine more responsive in human interactions. At the beginning of the paper, the researchers note the importance of the human smile in social mirroring:

> Few gestures are more endearing than a smile. But when two people smile at each other simultaneously, the effect is amplified: Not only is the feeling mutual but also for both parties to execute the smile simultaneously, they likely are able to correctly infer each other’s mental state in advance.

The researchers describe their new robot as an “anthropomorphic facial robot.” It is not their first: a previous iteration was dubbed “Eva,” but the researchers say Emo is notably more advanced.

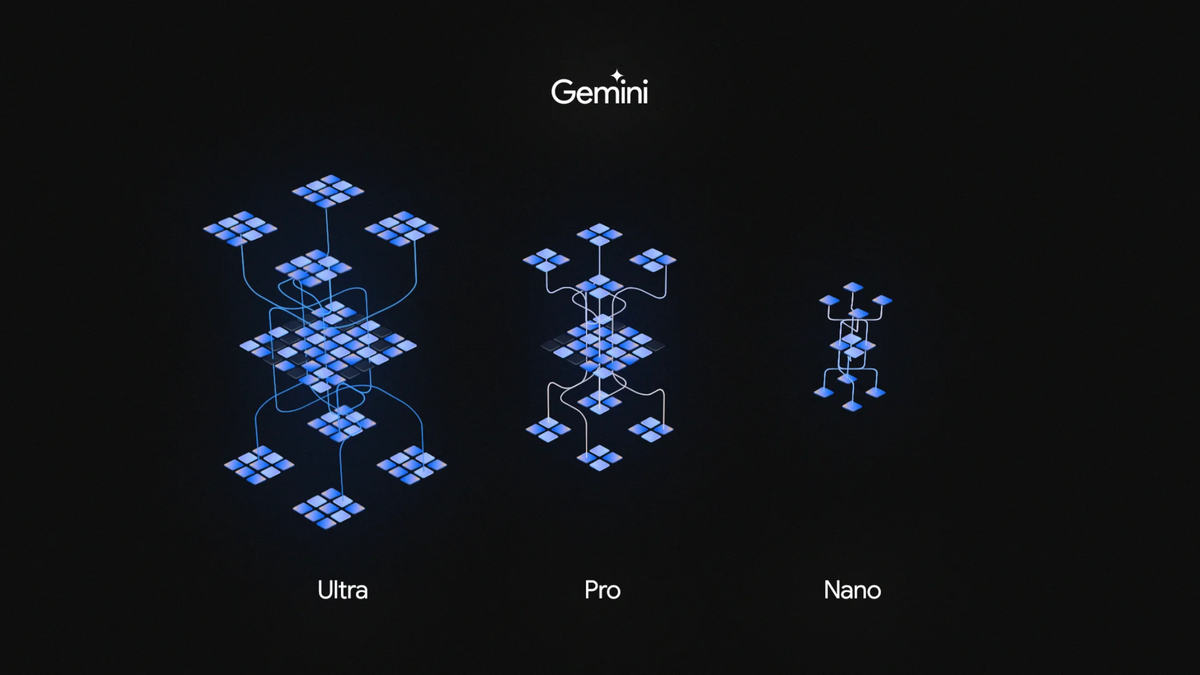

The goal with Emo was to achieve what the researchers call “coexpression,” or the simultaneous emulation of a human conversant’s facial expression. To accomplish that, they developed a predictive algorithm trained on a large video dataset of humans making facial expressions. The researchers claim the algorithm can “predict the target expression that a person will produce on the basis of just initial and subtle changes in their face.” The algorithm then tells the robot’s hardware which facial configuration to emulate in “real-time,” the researchers say.

Actually getting the robot to make a facial expression is a different story. The robot’s various facial configurations are powered by 26 different motors and 26 actuators. The researchers say that all of the motors except the one controlling the robot’s jaw are “symmetrically distributed,” meaning they are designed to create symmetrical expressions. Three motors control the robot’s neck movements. The actuators, meanwhile, allow for the creation of asymmetrical expressions.

Emo also has an interchangeable silicone “skin” that is attached to its face with 30 magnets. The researchers note that this skin can be “replaced with alternative designs for a different appearance and for skin maintenance,” making the face customizable. The robot’s “eyes” are equipped with high-resolution RGB cameras, which allow Emo to see its conversation partner and thus emulate that person’s facial expression.

At the same time, the researchers write that they deploy what they call a “learning framework consisting of two neural networks—one to predict Emo’s own facial expression (the self-model) and another to predict the conversant’s facial expression (the conversant model).” Put the software and hardware together, and the result is a machine that can anticipate a conversant’s expression and reproduce it as it happens.
